There are various implementations of in-place merge sort around the web, including my own, and various papers describing procedures for in-place merging. Then a few days ago, while researching Smoothsort, I stumbled upon a page titled Smoothsort Demystified which gives a nicely detailed explanation of the sorting algorithm. I haven’t seen such a tutorial for in-place merge sort. At best, I’ve only seen vague explanations or descriptions which use terminology that many people are unlikely to understand. Even Wikipedia only gives a couple of details about in-place merging without any adequate explanation or demonstration. I figured that since I know a technique for in-place merge sort and how to implement it, I’d take a stab at writing my own tutorial.

Update 8/31/14: It seems that my initial understanding of “rotations” was naive at best. I plan to revise this post to describe three in-place merging techniques: insertion -> range swaps -> rotations. Also, thanks to BonzaiThePenguin, I’m now aware of an in-place stable sorting algorithm called Block sort which runs in O(n log n) time. The algorithm seems highly complex and I haven’t had time to study it yet.

Before reading any further, it is highly recommended that you already understand merge sort and preferably have even implemented it yourself. If not, there are more than enough resources online that will adequately teach you the merge sort algorithm, including several YouTube videos. It would be best to familiarize yourself with the algorithm before attempting to follow my tutorial.

What do you mean by “in-place”? A standard merge sort requires you to allocate a buffer equal in length to the input being sorted. For example, if you wish to sort an array with 1000 elements, it is necessary to allocate an additional scratch array with 1000 elements as a space for merging elements before copying them back to the input array. This is why merge sort has O(n) space complexity. An in-place sorting algorithm doesn’t require allocating any additional memory for sorting. Several algorithms meet this requirement, including insertion sort and heap sort which have O(1) space complexity. However, in-place merge sort has O(log n) space complexity. This is because, like quick sort, it is a recursive function, and each level of recursion pushes a stack frame (not the elements themselves) onto the call stack.

How does in-place merging affect the performance and complexity of merge sort? A naive implementation may be 2-3x slower than a standard merge sort. In-place merging only requires a minor increase in comparisons. The worst effect on performance occurs due to the significant increase in reads and writes (there is lots of swapping going on). I will explain a couple of optimizations towards the end which can significantly increase the performance of the algorithm.

Is in-place merge sort stable? Yes! As long as it is implemented correctly, in-place merge sort will preserve the stability of merge sort.

Why would I want to use an in-place merge sort? The truth is, you probably wouldn’t. The only reason I can fathom is if you’re working with embedded systems with very limited memory. In the vast majority of cases, merge sort or Timsort would be a better choice. Otherwise, this is merely for those who are interested or graduate students who need a topic for their thesis.

Is this applicable to Timsort? Only partially. It is perfectly possible to implement an in-place natural merge sort. However, Timsort is an adaptive sort and has a galloping mode which can significantly reduce the number of comparisons on partially sorted data. If you were to implement an in-place Timsort, it would be impossible to implement galloping mode as well… at least using the technique I describe in this post.

Rotations

Before I move forward, I should define what is meant by rotations as they are used heavily in the merging process. Actually, rotations are used in a limited form here in a way that is functionally equivalent to std::swap_ranges in C++ (aka block swap). First, I’ll explain how rotations are used in the algorithm, then I’ll explain what rotations really are and why it’s still a proper usage of the term.

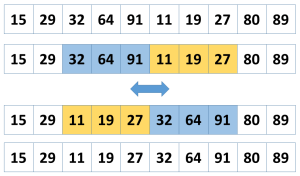

For in-place merging, rotations are used to swap the elements in two adjacent sublists which are equal in length. As you will soon see, this technique is used to move a large number of elements at once.
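As a minimal sketch of that block swap, the following uses std::swap_ranges from the standard library. The helper name `block_swap` is made up for this example, and it assumes the caller guarantees that the second sublist is at least as long as the first.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Swap two adjacent sublists of equal length:
// [first, mid) and the same number of elements starting at mid.
// block_swap is a hypothetical helper name used only for illustration.
template <typename Iter>
void block_swap(Iter first, Iter mid) {
    std::swap_ranges(first, mid, mid);
}
```

For example, calling `block_swap(v.begin(), v.begin() + 3)` on a vector holding 4, 5, 6, 1, 2, 3 exchanges the two halves in a single pass.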

That is the basic operation of in-place merging. So what are rotations anyway? To rotate the elements in a sublist means to shift them some number of places to the right, with elements at the end wrapping around to the beginning (or to the left, with elements at the beginning wrapping around to the end). So if you have the list [1,2,3,4,5], shifting it one place to the right will give you [5,1,2,3,4].

The fact that the two ranges being swapped are adjacent is important in calling this operation a rotation. If the length of the sublist being rotated is an even number, then shifting the elements half that distance results in the same operation as swap_ranges above. In the following graphic, I shift the elements one place to the right three times, achieving the same result as before.

That’s one way to look at rotations. The way that std::rotate in C++ is portrayed is different. Rather than shifting elements N places to the left or right, std::rotate rotates the elements on a pivot indicated by some middle element, such that the middle element becomes the new first element. Following that definition, if the middle element is at index M, then shifting the elements M places to the left achieves the same result.
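To make the two views of rotation concrete, here is a small sketch built on std::rotate. The function names `shift_right_once` and `rotate_left` are invented for this example. When the sublist has an even length and is rotated by half that length, the result matches a block swap of its two halves.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Shift every element one place to the right; the last element wraps
// around to the front. (Hypothetical helper for illustration.)
void shift_right_once(std::vector<int>& v) {
    if (v.size() < 2) return;
    std::rotate(v.begin(), v.end() - 1, v.end());
}

// Rotate left by m places: the element at index m becomes the new
// first element, which is how std::rotate describes its pivot.
void rotate_left(std::vector<int>& v, std::size_t m) {
    assert(m <= v.size());
    std::rotate(v.begin(), v.begin() + static_cast<std::ptrdiff_t>(m), v.end());
}
```

Shifting a six-element list one place to the right three times and rotating it left by three places both land every element in the same position.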

In-Place Merging

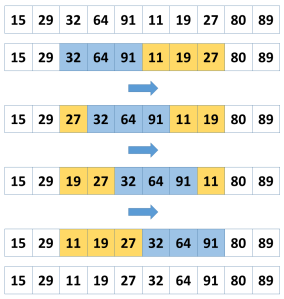

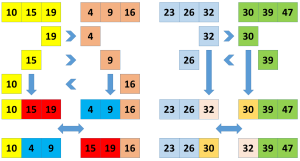

Now that I’ve explained rotations in too much detail, I can explain how to use them for in-place merging. Let us define two sorted sublists, A and B, that we want to merge in-place.

The trick is to swap the largest elements in A for the smallest elements in B. A linear search can find the range of elements to rotate. Start from the middle and extend outwards, comparing pairs of elements equidistant from the middle, until you find an element in A which is less than or equal to its counterpart in B. Rotate the elements between these bounds.

That’s the basic operation. However, something special happened. Of the two ranges, the smallest elements are in A and the largest elements are in B, albeit not in order. This means you can continue to merge A and B independently, so all you’re left with is two smaller merges. This also means that in-place merging is a recursive function in itself.
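The procedure so far can be sketched roughly as follows. This is one possible reading of the description rather than a reference implementation: the name `inplace_merge_linear` is made up, elements are compared with `operator<` only, and the search stops on equal elements so that ties keep their original order.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Merge the sorted ranges A = [first, mid) and B = [mid, last) in place.
// inplace_merge_linear is a hypothetical name for this sketch.
template <typename Iter>
void inplace_merge_linear(Iter first, Iter mid, Iter last) {
    if (first == mid || mid == last) return;

    // Linear search: grow the range outwards from the middle while the
    // element in A is strictly greater than its mirror image in B.
    Iter lo = mid;  // start of the elements of A to swap
    Iter hi = mid;  // end of the elements of B to swap
    while (lo != first && hi != last && *hi < *std::prev(lo)) {
        --lo;
        ++hi;
    }
    if (lo == mid) return;  // nothing out of order: already merged

    // Swap the largest elements of A with the smallest elements of B.
    std::swap_ranges(lo, mid, mid);

    // Everything left of mid is now <= everything right of it,
    // so the two halves can be merged independently.
    inplace_merge_linear(first, lo, mid);
    inplace_merge_linear(mid, hi, last);
}
```

Each recursive call works on a strictly smaller range, so the recursion always terminates. The depth of the recursion depends on the data.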

By recursively applying rotations in this manner, eventually you’ll merge the two ranges. Let’s apply this process once more and see what happens:

Just like that, the right-hand side is already sorted and the left-hand side is almost sorted. A couple more rotations on the left-hand side and the merge procedure would be complete.

In essence, each rotation moves the elements that much closer to their final location. When the sublists are large, rotations can move thousands of elements at a time. But as the sublists become smaller and smaller, rotations become less efficient. When the sublists become small enough, there are a couple of techniques you could apply that would be more efficient than applying several more rotations.

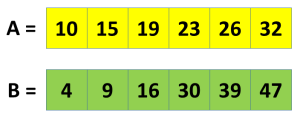

Binary Search

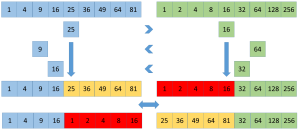

In the previous section, I stated that a linear search can be used to find the range of elements to rotate. While that is fine for small sublists, it would be very impractical for large sublists with thousands or millions of elements. Using a linear search, it may be necessary to compare thousands of elements to find the bounds of the range to rotate.

In general, a better approach is to use a binary search which is guaranteed to find the bounds within O(log n) comparisons. A binary search can be tricky to implement as it’s easy to get stuck in an infinite loop. However, the end result is worth it as it will find you the bounds much quicker and with far fewer comparisons.

Start half-way from the center and move the search inwards or outwards, depending on the result of the comparison. It’s important to only compare elements which are equidistant from the center!
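A binary search for the bounds might look like the sketch below. It assumes random-access iterators and two sorted sublists, and the name `find_swap_length` is invented here. The search works because the sublists are sorted: once a pair of equidistant elements is in order, every pair farther from the center is in order too.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Return how many elements on each side of mid need to be swapped
// when merging sorted [first, mid) and sorted [mid, last).
// find_swap_length is a hypothetical name for this sketch.
template <typename Iter>
typename std::iterator_traits<Iter>::difference_type
find_swap_length(Iter first, Iter mid, Iter last) {
    using Diff = typename std::iterator_traits<Iter>::difference_type;
    Diff lo = 0;
    Diff hi = std::min<Diff>(mid - first, last - mid);
    while (lo < hi) {
        Diff i = lo + (hi - lo) / 2;
        // Only compare elements equidistant from the center.
        if (*(mid + i) < *(mid - 1 - i)) {
            lo = i + 1;  // still out of order: the bound lies farther out
        } else {
            hi = i;      // in order: the bound is here or closer to the center
        }
    }
    return lo;
}
```

Because `lo` and `hi` move strictly closer together on every iteration, the loop cannot get stuck. The returned length k means the last k elements of the left sublist are swapped with the first k elements of the right sublist.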

Optimizing Using Insertions

When the sublists become small enough, using insertions to move the last few elements into place would be more efficient than using rotations. However, how do you decide whether to use insertions or rotations? What I do is define a threshold for when to switch to insertion.

A well-optimized merge sort typically uses insertion sort to sort small sublists before merging anything. Similarly, a well-optimized quick sort uses insertion sort once a partition is small enough. Taking these as examples, I typically use insertion sort on sublists up to 32 elements in length. In the worst case, this would imply (32+31+30+29+28+…) insertions. This can be calculated directly using the formula (n*(n+1)/2). For 32 elements, this gives us a worst case of 528 insertions.

Now we need a formula to determine whether the number of insertions required to merge two lists is below this threshold. This is actually simple: just multiply the lengths of the two lists. If one sublist has 10 elements and the other has 15 elements, this gives us a worst case of (10*15=150) insertions.
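Put together, the threshold check and an insertion-based merge could be sketched like this. The names `kInsertionThreshold`, `should_use_insertion` and `insertion_merge` are invented for the example, and treating the threshold as a strict "below" comparison is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Worst case for 32 elements using n*(n+1)/2, as described in the text.
constexpr std::size_t kInsertionThreshold = 32 * (32 + 1) / 2;  // 528

// Worst-case insertions for merging two sublists is len_a * len_b.
bool should_use_insertion(std::size_t len_a, std::size_t len_b) {
    return len_a * len_b < kInsertionThreshold;
}

// Merge sorted [first, mid) and sorted [mid, last) by inserting each
// element of the right sublist into its place on the left.
template <typename Iter>
void insertion_merge(Iter first, Iter mid, Iter last) {
    for (Iter cur = mid; cur != last; ++cur) {
        Iter pos = cur;
        // Move *cur left past every element strictly greater than it.
        while (pos != first && *pos < *std::prev(pos)) {
            std::iter_swap(pos, std::prev(pos));
            --pos;
        }
        // If this element did not move, the rest is already in place.
        if (pos == cur) break;
    }
}
```

With sublists of 10 and 15 elements the product is 150, so `should_use_insertion(10, 15)` returns true and the merge would switch to insertion.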

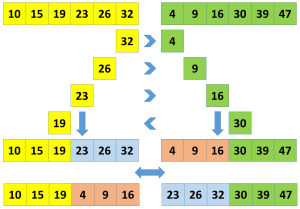

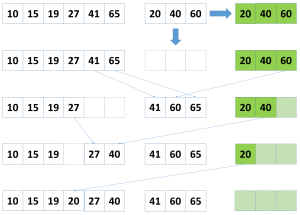

Using a scratch array for merging

By applying rotations, you effectively reduce two large sublists into several smaller sublists. When the sublists become small enough, a very effective optimization is to simply allocate a small buffer to merge the sublists in. This will allow you to greatly reduce the number of rotations and is far more efficient than using insertions.

This can be further optimized as well. While most implementations of merge sort allocate a buffer of n elements, it is trivial to implement it such that the buffer never holds more than n/2 elements, which is generally a bit quicker as well. This is the same technique that Timsort uses to limit its worst-case buffer to n/2 elements.

Begin by copying one of the sublists into the buffer. Then simply merge the elements in-place. Depending on which sublist is smaller, it may be necessary to merge the elements in reverse order. In the following graphic, the green cells represent a small buffer which is large enough to store a copy of the right sublist.
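Here is a rough sketch of that buffered merge, assuming the data lives in a std::vector and that the smaller sublist is the one copied out. The name `buffered_merge` is made up, and allocating a fresh buffer on each call is a simplification; a real implementation would likely reuse one buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Merge sorted v[0, mid) and sorted v[mid, n) using a buffer that
// holds only the smaller sublist. buffered_merge is a hypothetical name.
template <typename T>
void buffered_merge(std::vector<T>& v, std::size_t mid) {
    assert(mid <= v.size());
    const std::size_t n = v.size();
    const auto split = v.begin() + static_cast<std::ptrdiff_t>(mid);
    if (mid <= n - mid) {
        // Left sublist is smaller: copy it out and merge front to back.
        std::vector<T> buf(v.begin(), split);
        std::size_t i = 0, j = mid, out = 0;
        while (i < buf.size() && j < n) {
            // Take from the right only when strictly smaller (stable).
            if (v[j] < buf[i]) v[out++] = std::move(v[j++]);
            else               v[out++] = std::move(buf[i++]);
        }
        while (i < buf.size()) v[out++] = std::move(buf[i++]);
        // Any remaining right-hand elements are already in place.
    } else {
        // Right sublist is smaller: copy it out and merge back to front.
        std::vector<T> buf(split, v.end());
        std::size_t i = mid, j = buf.size(), out = n;
        while (i > 0 && j > 0) {
            // Take from the left only when strictly greater (stable).
            if (buf[j - 1] < v[i - 1]) v[--out] = std::move(v[--i]);
            else                       v[--out] = std::move(buf[--j]);
        }
        while (j > 0) v[--out] = std::move(buf[--j]);
        // Any remaining left-hand elements are already in place.
    }
}
```

The write position never overtakes the unread elements of the sublist that stays in the array, which is why copying out only one side is safe.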

This is a great way to cut the memory required while only being marginally slower, although technically it wouldn’t be an in-place merge sort anymore. This technique is also applicable to Timsort and allows you to retain some of the benefit of galloping mode. I have implemented my own variant of Timsort with O(n/1024) space complexity.

Summary

In this post, I have demonstrated a practical technique to merge two sublists in place. It has limited applicability in the real world, but is interesting nonetheless and is a topic of research that has been worthy of publication. When writing this tutorial, I did my best to explain things in simpler terms and to provide some intuition for why this technique works. I simply hope that it was easy to understand and that you learned something new from reading it.

I’m looking for feedback on this article. Please feel free to post any questions or comments here or on Reddit.
